After implementing HTTP/2 and TLS 1.3 in Haskell, I have been working on IETF QUIC. This article explains what I did in Japan's 2019 fiscal year, as a report to our sponsor, Internet Initiative Japan (IIJ). I hold positions at both IIJ and IIJ-II. I'm wearing my IIJ-II hat in this article.

If you wonder why I'm using Haskell to implement network protocols, please take a look at my position paper for NetPL 2017. In short, I love its strong and rich type system and its concurrency based on lightweight threads (green threads).

This article mainly describes the server side because it is more challenging than the client side.

APIs

To implement APIs for QUIC servers, I started with the accept-then-fork style inspired by Berkeley socket APIs:

withQUICServer :: ServerConfig -> (QUICServer -> IO ()) -> IO ()
accept :: QUICServer -> IO Connection
close :: Connection -> IO ()

Toy server code that runs server :: Connection -> IO () in a lightweight thread looks like this:

withQUICServer conf $ \qs -> forever $ do
    conn <- accept qs
    void $ forkFinally (server conn) (\_ -> close conn)

It turned out that my test server (mew.org:4433) based on these APIs occasionally got stuck. First I suspected buffer overruns and illegal UDP packets. So, I set exception handlers everywhere, but no exception was caught. I checked all the code of the underlying libraries and found a careless bug, but it was not the source of this problem. At that point, I ran out of ideas.

After taking a deep breath, I inserted print statements everywhere to try to understand what was going on. The printed output was less smooth than I expected, and I realized that the source of the problem was the API itself. accept was processed in the main thread. So, if accept gets stuck while processing the handshake, everything gets stuck. This experience led to a simpler API:

runQUICServer :: ServerConfig -> (Connection -> IO ()) -> IO ()

There is no intermediate data type (QUICServer) anymore. The higher-order function (the loan pattern in OOP terminology) ensures that the handshake is processed in a spawned thread and that the Connection is always closed at the end.
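The idea can be illustrated with a minimal sketch. It is not the code of the quic library: serveEach, its parameters and demo are hypothetical stand-ins, and the "handshake" is just an IO action. The point is only that the handshake runs inside the spawned thread, so a failing or blocking handshake cannot stall the other connections, and that bracket closes the connection whatever the user code does.

```haskell
import Control.Concurrent (forkFinally)
import Control.Concurrent.MVar (MVar, newEmptyMVar, putMVar, takeMVar)
import Control.Exception (bracket)
import Data.IORef (atomicModifyIORef', newIORef, readIORef)

-- | Loan pattern (hypothetical sketch): for every pending peer, spawn a
-- thread that performs the handshake itself, lends the connection to the
-- user code, and always closes it afterwards.
serveEach
  :: (peer -> IO conn)   -- handshake (may block or fail)
  -> (conn -> IO ())     -- close
  -> (conn -> IO ())     -- user server code
  -> [peer]
  -> IO [MVar ()]        -- one completion signal per spawned thread
serveEach handshake close server = mapM spawn
  where
    spawn peer = do
      done <- newEmptyMVar
      _ <- forkFinally (bracket (handshake peer) close server)
                       (\_ -> putMVar done ())
      return done

-- | Peer 1 fails during the handshake; peer 2 is still served and closed.
demo :: IO [String]
demo = do
  events <- newIORef []
  let record e = atomicModifyIORef' events (\es -> (e : es, ()))
      handshake :: Int -> IO Int
      handshake 1 = ioError (userError "handshake failed")
      handshake p = return p
  dones <- serveEach handshake
                     (\c -> record ("close " ++ show c))
                     (\c -> record ("serve " ++ show c))
                     [1, 2]
  mapM_ takeMVar dones
  reverse <$> readIORef events
```

In this sketch the failed handshake of peer 1 leaves no trace in the event log, while peer 2 is served and then closed.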

QUIC multiplexes streams in a connection. To send and receive data, the following APIs are provided at this moment:

recvStream :: Connection -> IO (StreamID, ByteString)
sendStream :: Connection -> StreamID -> Fin -> ByteString -> IO ()
shutdownStream :: Connection -> StreamID -> IO ()

You can find the current APIs in Network.QUIC.

TLS handshake

TLS stands for Transport Layer Security. But QUIC uses TLS 1.3 as data structures, not as transport. This means that the QUIC frames on UDP convey the TLS handshake messages without the TLS record layer. Encryption/decryption are carried out by QUIC itself, not by TLS.

To separate the TLS data types from its record layer and transport, I first divided many functions of the server and client code in the TLS library in Haskell and introduced static-function APIs for QUIC. A QUIC client with these APIs succeeded in communicating with the ngtcp2 server. However, this approach had the following drawbacks:

- APIs only cover limited cases. To cover all cases including hello retry request, resumption and new session ticket, more APIs should be provided.

- Modifications are too drastic. Whenever code is merged into the client and server code in the master branch, I need to redo the division. (How many times did I rework it?)

Olivier Chéron, another maintainer of the TLS library, hesitated to merge my modification and suggested that I introduce a flexible record layer. This motivated me to explore another approach based on lightweight threads. The record layer structure I arrived at is as follows:

data RecordLayer = RecordLayer {
    encodeRecord :: Record Plaintext -> IO (Either TLSError ByteString)
  , sendBytes :: ByteString -> IO ()
  , recvRecord :: IO (Either TLSError (Record Plaintext))
  }

By running a TLS thread with a transparent record layer (no encryption/decryption and no I/O), we can obtain the TLS handshake messages themselves. The TLS thread can be controlled by the following APIs:

newQUICServer :: ServerParams -> IO ServerController

type ServerController = ServerControl -> IO ServerStatus

data ServerControl =
    PutClientHello ClientHello -- SendRequestRetry, SendServerHello, ServerNeedsMore
  | GetServerFinished -- SendServerFinished
  | PutClientFinished Finished -- SendSessionTicket, ServerNeedsMore
  | ExitServer -- ServerHandshakeDone

data ServerStatus =
    ServerNeedsMore
  | SendRequestRetry ServerHello
  | SendServerHello ServerHello [ExtensionRaw] (Maybe EarlySecretInfo) HandshakeSecretInfo
  | SendServerFinished Finished ApplicationSecretInfo
  | SendSessionTicket SessionTicket
  | ServerHandshakeDone

With these APIs, all cases are covered with only small modifications to the client and server code. Stability has been checked against many QUIC implementations. The usage of these APIs can be found in Network.QUIC.Handshake.
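The controller style can be sketched with a toy model. This is not the TLS library's code: Layer, toyHandshake, Control and newController are hypothetical names, and strings stand in for handshake messages. The sketch assumes only what the text describes: the handshake is ordinary straight-line code running in its own thread on top of a record layer that does no I/O, and the caller steps it through a function.

```haskell
import Control.Concurrent (forkIO)
import Control.Concurrent.MVar (newEmptyMVar, putMVar, takeMVar)

-- | Toy "transparent record layer": no encryption and no network I/O.
data Layer = Layer
  { recvMsg :: IO String
  , sendMsg :: String -> IO ()
  }

-- | Straight-line handshake code that knows nothing about its caller.
toyHandshake :: Layer -> IO ()
toyHandshake l = do
  ch <- recvMsg l
  sendMsg l ("ServerHello(" ++ ch ++ ")")
  sendMsg l "ServerFinished"
  cf <- recvMsg l
  sendMsg l ("Done(" ++ cf ++ ")")

-- | Hypothetical control messages: feed one message in, or just fetch
-- the next output of the handshake thread.
data Control = Put String | Get

type Controller = Control -> IO String

-- | Run the handshake in a lightweight thread and return a function
-- that drives it one step at a time.
newController :: (Layer -> IO ()) -> IO Controller
newController handshake = do
  inbox <- newEmptyMVar
  outbox <- newEmptyMVar
  _ <- forkIO (handshake (Layer (takeMVar inbox) (putMVar outbox)))
  return $ \ctl -> case ctl of
    Put msg -> putMVar inbox msg >> takeMVar outbox
    Get     -> takeMVar outbox

demo :: IO [String]
demo = do
  ctl <- newController toyHandshake
  a <- ctl (Put "ClientHello")
  b <- ctl Get
  c <- ctl (Put "ClientFinished")
  return [a, b, c]
```

The handshake code itself stays untouched in this style. Only the record layer handed to it changes, which is why the modifications to the client and server code can remain small.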

One long-standing issue is when to terminate the TLS thread for clients. After sending Client Finished to a server, the client waits for New Session Ticket (NST). However, some servers do not send NST.

QUIC draft 25 introduced the HANDSHAKE_DONE frame, which is sent from servers to clients. Thanks to this, the main thread of the QUIC client can now terminate the TLS thread when HANDSHAKE_DONE is received. During the inter-operability test for draft 25, I noticed that the ngtcp2 server sends NST in a CRYPTO frame after HANDSHAKE_DONE. So, I changed the Haskell QUIC client to wait for a period after receiving HANDSHAKE_DONE, hoping that NST will also be received during that period.

The server architecture

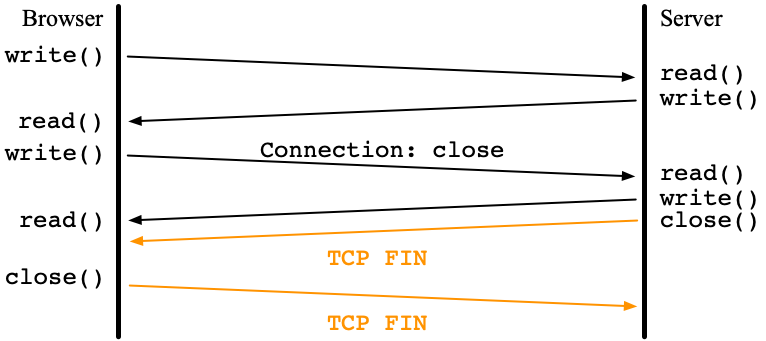

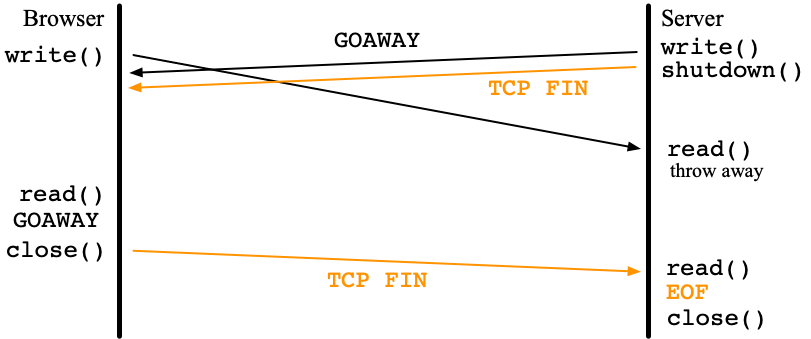

runQUICServer first spawns a Dispatcher thread for each network interface specified in ServerConfig. Each Dispatcher manages one wildcard socket, {UDP, local-addr, local-port, *, *}. After receiving an Initial packet from a wildcard socket, a connected socket, {UDP, local-addr, local-port, remote-addr, remote-port}, is created based on the peer's address. For this connected socket, several threads are spawned to maintain the Connection, as illustrated in Fig 1:

- Launcher: a thread to make a new Connection and launch the user server code (Connection -> IO ()) specified to runQUICServer. recvStream pops incoming data from InputQ and sendStream pushes outgoing data to OutputQ.

- TLS: a thread for the TLS handshake. It gets TLS handshake messages as ServerControl and gives back TLS handshake messages as ServerStatus. This thread is terminated when the TLS handshake is completed.

- Reader: a thread to read data from the connected socket and pass them to Receiver via RecvQ.

- Receiver: a thread to read data from RecvQ and decrypt them. It passes CRYPTO and STREAM frames to Launcher and processes control frames such as ACK and PING. For instance, when ACK frames are received, the corresponding packets are removed from RetransDB.

- Sender: a thread to read data from OutputQ and encrypt-then-send them to the connected socket. It also saves the original plain packets to RetransDB.

- Resender: a thread to pop packets from RetransDB and push them to OutputQ repeatedly.

Reader and Receiver

Processing incoming QUIC packets is two-pass: decoding and decryption. This separation made the code drastically simpler. Reader decodes the unprotected part of the header using the following function:

decodeCryptPackets :: ByteString -> IO [CryptPacket]

Note that this function does not take the Connection argument. CryptPacket is defined as follows:

data CryptPacket = CryptPacket Header Crypt

data Header = Initial Version CID CID Token
            | RTT0 Version CID CID
            | Handshake Version CID CID
            | Short CID

data Crypt = Crypt {
    cryptPktNumOffset :: Int
  , cryptPacket :: ByteString
  }

CryptPacket is passed to Receiver via RecvQ:

newtype RecvQ = RecvQ (TQueue CryptPacket)

It is Receiver's responsibility to decrypt the protected part of the header and the encrypted body using the following function:

decryptCrypt :: Connection -> Crypt -> EncryptionLevel -> IO (Maybe Plain)

decryptCrypt takes Connection as an argument since Connection holds secrets. Plain is defined as follows:

data Plain = Plain {
    plainFlags :: Flags Raw
  , plainPacketNumber :: PacketNumber
  , plainFrames :: [Frame]
  }
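The two-pass split can be illustrated with a toy pipeline. Everything here is a hypothetical simplification: a one-byte "header", an XOR "cipher" in place of real packet protection, and a receiver that stops after a fixed number of packets. What it keeps from the design above is that the first pass needs no connection state, while the second pass needs the secret, and that the two passes are connected by a TQueue.

```haskell
import Control.Concurrent (forkIO)
import Control.Concurrent.STM
  (TQueue, atomically, newTQueueIO, readTQueue, writeTQueue)
import Control.Monad (replicateM)
import Data.Bits (xor)
import qualified Data.ByteString as BS
import Data.Word (Word8)

-- | Toy stand-in for CryptPacket: a decoded header byte plus a body
-- that is still encrypted.
data Crypt = Crypt
  { cryptLevel :: Word8
  , cryptBody  :: BS.ByteString
  }

newtype RecvQ = RecvQ (TQueue Crypt)

-- | Pass 1 (Reader): split off the unprotected part. No secrets needed.
decodeToy :: BS.ByteString -> Maybe Crypt
decodeToy bs = do
  (lvl, rest) <- BS.uncons bs
  return (Crypt lvl rest)

-- | Pass 2 (Receiver): decrypt with a key held by the connection.
-- XOR is only a placeholder for real packet protection.
decryptToy :: Word8 -> Crypt -> BS.ByteString
decryptToy key c = BS.map (xor key) (cryptBody c)

-- | Reader side: decode a datagram and enqueue it, dropping empty ones.
reader :: RecvQ -> BS.ByteString -> IO ()
reader (RecvQ q) = maybe (return ()) (atomically . writeTQueue q) . decodeToy

-- | Receiver side: dequeue and decrypt n packets.
receiver :: Word8 -> RecvQ -> Int -> IO [BS.ByteString]
receiver key (RecvQ q) n =
  replicateM n (decryptToy key <$> atomically (readTQueue q))

demo :: IO [BS.ByteString]
demo = do
  q <- RecvQ <$> newTQueueIO
  let key = 0x2a :: Word8
      seal lvl = BS.cons lvl . BS.map (xor key) . BS.pack
  _ <- forkIO (mapM_ (reader q) [seal 0 [104, 105], BS.empty, seal 1 [33]])
  receiver key q 2
```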

Sender and Resender

Unlike the incoming packet processing, the outgoing packet processing is one-pass:

encodePlainPacket :: Connection -> PlainPacket -> Maybe Int -> IO [ByteString]

The third argument controls padding. If Just n is specified, padding is generated so that the size of the resulting packet is exactly n. Otherwise, no padding is used. PlainPacket is defined as follows:

data PlainPacket = PlainPacket Header Plain

Sender stores PlainPackets in RetransDB, while Resender takes PlainPackets from RetransDB and enqueues them to OutputQ again.
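The bookkeeping around RetransDB can be sketched as a pure data structure. This is an illustration under assumptions, not the library's implementation: the function names are hypothetical, packets are plain strings, and the sketch ignores timing (a real Resender would presumably take only packets that have waited long enough). In a threaded program such a value could be shared through an IORef or a TVar.

```haskell
import qualified Data.Map.Strict as M

type PacketNumber = Int

-- | Hypothetical retransmission database keyed by packet number.
newtype RetransDB p = RetransDB (M.Map PacketNumber p)

emptyDB :: RetransDB p
emptyDB = RetransDB M.empty

-- | Sender: remember the plain packet that was just sent.
keep :: PacketNumber -> p -> RetransDB p -> RetransDB p
keep pn p (RetransDB m) = RetransDB (M.insert pn p m)

-- | Receiver: forget packets that the peer acknowledged.
acked :: [PacketNumber] -> RetransDB p -> RetransDB p
acked pns (RetransDB m) = RetransDB (foldr M.delete m pns)

-- | Resender: take everything that is still unacknowledged.
takeUnacked :: RetransDB p -> ([p], RetransDB p)
takeUnacked (RetransDB m) = (M.elems m, emptyDB)

-- | Packets 1..3 are sent, packet 2 is acknowledged, the rest is resent.
demo :: [String]
demo = fst (takeUnacked (acked [2] db))
  where
    db = keep 3 "c" (keep 2 "b" (keep 1 "a" emptyDB))
```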

Dispatchers

Dispatchers carry out the following jobs:

- Passing information for new connections

- Handling retry and version negotiation packets

- Handling migration and NAT rebinding

- Combining fragmented Initial packets

Dispatchers decode incoming packets using the following function:

decodePackets :: ByteString -> IO [PacketI]

PacketI is defined as follows:

data PacketI = PacketIV VersionNegotiationPacket
             | PacketIR RetryPacket
             | PacketIC CryptPacket
             | PacketIB BrokenPacket

This data type captures that version negotiation packets and retry packets are not encrypted. VersionNegotiationPacket and RetryPacket should be received by clients only. And servers should receive CryptPacket only. For instance, if a server receives VersionNegotiationPacket, the server ignores it.

New connections

A Dispatcher maintains a dictionary for Connection. The keys are destination connection IDs and the values are pairs of Connection and MigrationQ (described later).

If the version of an Initial CryptPacket is known, the Dispatcher checks the Connection dictionary to see if the destination connection ID is stored. If it is not stored, the Dispatcher prepares for a new Connection. A new RecvQ is created and the Initial packet is pushed into it. Then the information needed to create a Connection, including the RecvQ and the peer's address/port, is queued into a so-called accepting queue. The destination connection ID is not registered in the Connection dictionary at this moment, to prevent the Initial flooding attack.

The main thread repeatedly spawns Launcher. Launcher accepts the information and tries to make a new Connection. Recall that Reader and Sender use a connected socket while Dispatcher holds a wildcard socket. How can we make a connected socket for a new Connection safely?

Suppose that a wildcard socket, {UDP, 192.0.2.1, 4433, *, *}, exists and the peer's address/port is 203.0.113.0:3456. A socket, {UDP, 192.0.2.1, 4433, 203.0.113.0, 3456}, should be created without errors or race conditions. My first attempt was as follows:

1. Create a new UDP socket with SO_REUSEADDR

2. Bind it to 192.0.2.1:4433

3. Connect it to 203.0.113.0:3456

Unfortunately, BSD variants reject (2). Linux accepts (2), but a race condition can occur. Kazuho Oku, one of the quicly maintainers, suggested that I use an ANY address in (2). The improved process is as follows:

1. Create a new UDP socket with SO_REUSEADDR

2. Bind it to *:4433

3. Connect it to 203.0.113.0:3456. This also binds the local address to 192.0.2.1.

This process succeeds even on BSD variants, and there are no race conditions on any platform.
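The improved three steps can be sketched with the network package's Network.Socket module. This is a minimal IPv4-only illustration, not the code of the quic library: connectedUDP and demo are hypothetical names, and the demo uses loopback addresses with ephemeral ports instead of the addresses in the text.

```haskell
import Control.Exception (bracketOnError)
import Network.Socket

-- | Hypothetical helper following the three steps above (IPv4 only):
-- create a UDP socket with SO_REUSEADDR, bind it to the ANY address on
-- the server port, then connect it to the peer.
connectedUDP :: PortNumber -> SockAddr -> IO Socket
connectedUDP localPort peer =
  bracketOnError (socket AF_INET Datagram defaultProtocol) close $ \s -> do
    setSocketOption s ReuseAddr 1                                  -- step 1
    bind s (SockAddrInet localPort (tupleToHostAddress (0, 0, 0, 0))) -- step 2
    connect s peer                                                 -- step 3
    return s

-- | Loopback demo: a socket with a wildcard remote side stands in for
-- the Dispatcher's socket and another socket plays the peer. Returns
-- True if the connected socket ends up on the same local address/port.
demo :: IO Bool
demo = do
  let loopback = tupleToHostAddress (127, 0, 0, 1)
  wild <- socket AF_INET Datagram defaultProtocol
  setSocketOption wild ReuseAddr 1
  bind wild (SockAddrInet 0 loopback)
  SockAddrInet port _ <- getSocketName wild
  client <- socket AF_INET Datagram defaultProtocol
  bind client (SockAddrInet 0 loopback)
  peer <- getSocketName client
  s <- connectedUDP port peer
  local <- getSocketName s
  mapM_ close [wild, client, s]
  return (local == SockAddrInet port loopback)
```

Note that the socket which already holds the port needs SO_REUSEADDR as well, otherwise the second bind is expected to fail.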

After a connected socket is created and TLS handshake is done through the socket, Launcher registers the Connection to the dictionary.

Retry and version negotiation

When the version in a CryptPacket is unknown (for instance, a grease value), Dispatcher sends a version negotiation packet.

If an Initial CryptPacket contains no token, no Connection is found in the dictionary, and the server configuration requires retry, Dispatcher sends a Retry packet.

If a valid token is provided, a new connection is created as described in the previous subsection.
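The decisions for an Initial packet can be summarized as a pure function. This is a sketch with hypothetical names (InitialInfo, Action, dispatchInitial), not the library's code. Two branches are assumptions that the text does not state: what happens when the connection is already in the dictionary, and what happens when a token is present but invalid.

```haskell
-- | Hypothetical summary of what a Dispatcher knows about an Initial packet.
data InitialInfo = InitialInfo
  { versionKnown    :: Bool
  , connectionKnown :: Bool
  , tokenState      :: TokenState
  , retryRequired   :: Bool       -- taken from the server configuration
  }

data TokenState = NoToken | ValidToken | InvalidToken
  deriving (Eq, Show)

data Action
  = SendVersionNegotiation
  | PassToConnection       -- assumption: not described in the text
  | Ignore                 -- assumption: not described in the text
  | SendRetry
  | PrepareNewConnection
  deriving (Eq, Show)

dispatchInitial :: InitialInfo -> Action
dispatchInitial i
  | not (versionKnown i)                       = SendVersionNegotiation
  | connectionKnown i                          = PassToConnection
  | tokenState i == InvalidToken               = Ignore
  | tokenState i == NoToken && retryRequired i = SendRetry
  | otherwise                                  = PrepareNewConnection
```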

Migration or NAT rebinding

When a client moves to a new address/port or the client port is changed due to NAT rebinding, Dispatcher receives Short CryptPackets from its wildcard socket. Their destination connection IDs are found in the Connection dictionary. In this case, Dispatcher creates a MigrationQ, registers it in the dictionary and spawns Migrator. After that, Dispatcher enqueues this first migration packet and other migration packets, if any, to MigrationQ.

Migrator creates a new connected socket for the new address/port and spawns another Reader. Then Migrator passes the new socket to Sender, asks Sender to send PathChallenge and sleeps until Reader receives PathResponse. Then Migrator reads packets from MigrationQ and enqueues them to RecvQ. After a period, Migrator terminates the old Reader.

Fragmented Initial packets

In normal cases, a client sends one Initial packet when it tries to make a new connection. However, if a client wants to send a large Client Hello message, it is divided into multiple Initial packets.

My old implementation handled them naively. Let's consider the case of two Initial packets. When the first packet arrives, a wildcard socket catches it. A connected socket is created and the packet is acknowledged. Then the second packet is also caught by the wildcard socket. Creating another connected socket for the peer fails because the parameters are the same. Since the second packet is not acknowledged, the client resends it. The connected socket captures it this time. So, a new connection can be created.

The advantage of this approach is that Dispatcher does not maintain any information. The disadvantage is that this logic does not work on Linux. To my surprise, connect(2) succeeds even for the same parameters! This results in unexpected behavior.

So, I gave up the zero-cost approach and introduced another dictionary of a fixed size. The keys are original destination connection IDs while the values are RecvQs. An entry is created when the first Initial packet arrives. Subsequent Initial packets are queued to the RecvQ found in the dictionary.

I used a Priority Search Queue (PSQ) to make this fixed-size dictionary. Choosing time as the priority implements FIFO. The combination of size, deleteMin and insert implements the fixed size.
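The fixed-size behaviour can be sketched with two maps from the containers package. This is a deliberate substitution to keep the example dependency-free, not a real PSQ: one map looks entries up by key and the other orders keys by insertion time. A logical counter stands in for the clock, the names are hypothetical, and the limit is assumed to be at least 1.

```haskell
import qualified Data.Map.Strict as M

-- | Hypothetical fixed-size dictionary with FIFO eviction.
data Fixed k v = Fixed
  { limit  :: Int               -- maximum number of entries (assumed >= 1)
  , tick   :: Int               -- logical clock used as the priority
  , byKey  :: M.Map k (Int, v)  -- key -> (insertion time, value)
  , byTime :: M.Map Int k       -- insertion time -> key
  }

emptyFixed :: Int -> Fixed k v
emptyFixed n = Fixed n 0 M.empty M.empty

-- | Drop the entry with the smallest insertion time (deleteMin).
evictOldest :: Ord k => Fixed k v -> Fixed k v
evictOldest f = case M.minViewWithKey (byTime f) of
  Nothing             -> f
  Just ((_, k), rest) -> f { byTime = rest, byKey = M.delete k (byKey f) }

-- | Insert a key; if the dictionary is full, evict the oldest entry first.
insertFixed :: Ord k => k -> v -> Fixed k v -> Fixed k v
insertFixed k v f =
  f2 { tick   = t + 1
     , byKey  = M.insert k (t, v) (byKey f2)
     , byTime = M.insert t k (byTime f2)
     }
  where
    -- Re-inserting an existing key replaces it instead of evicting others.
    f1 = case M.lookup k (byKey f) of
      Just (old, _) -> f { byKey = M.delete k (byKey f)
                         , byTime = M.delete old (byTime f) }
      Nothing       -> f
    f2 | M.size (byKey f1) >= limit f1 = evictOldest f1
       | otherwise                     = f1
    t = tick f2

lookupFixed :: Ord k => k -> Fixed k v -> Maybe v
lookupFixed k = fmap snd . M.lookup k . byKey

-- | With a limit of 2, inserting a third key evicts the first one.
demo :: [Maybe Int]
demo = map (`lookupFixed` d) ["a", "b", "c"]
  where
    d = foldl (\acc (k, v) -> insertFixed k v acc) (emptyFixed 2)
              [("a", 1), ("b", 2), ("c", 3)]
```

A PSQ gives the same three operations (size, deleteMin, insert) in a single structure, which is why it fits this job well.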

Final remark

My code is available from the repository on GitHub. You can see the results of the 16th inter-operability test event. A lot of work is still left. I will tackle HTTP/3 and QPACK in March 2020.

The content of this article is just a snapshot, and the code may change substantially during development. So, I should write another article to update it when the quic library in Haskell is released.

I thank all the people, especially the IETF QUIC WG guys, who helped me.